Run integration tests using Docker inside a dockerized GitLab runner

In this post I’ll show how I run integration tests using Docker inside a dockerized GitLab runner.

Introduction

For a while I struggled with writing integration tests for my Go projects. The issue was that I wanted a setup that executes them automatically and repeatably, without the need to manually install or start/stop dependencies when running integration tests.

I found a solution by combining GitLab Runner and dockertest.

I’m already using a self-hosted GitLab instance. Since GitLab Runner can run in a Docker container, every PC that has Docker installed can become a build server.

dockertest is a tool which can be used to start Docker containers from inside Go code. Here it starts the containers running the dependencies (e.g. a database) needed by the tests. The advantage is that integration tests can be executed without having to remember to start all the required dependencies manually.

Using this locally is quite easy since the tests are executed directly on the developer’s machine and dockertest uses the Docker installation on that machine.

Running the same tests inside a Docker container (in our case a GitLab runner) becomes a problem, because the test itself needs to start a Docker container. To solve this we can use a concept called “Docker in Docker” (dind). It does what it sounds like: it allows you to use the docker command inside a Docker container.
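Why is setting an environment variable enough to redirect the tests to the dind service? When dockertest.NewPool is given an empty endpoint, it takes the Docker daemon address from the DOCKER_HOST environment variable and otherwise falls back to a default for the operating system. The following is a simplified sketch of that decision, assuming a Linux default socket; dockerEndpoint is a hypothetical helper for illustration, not dockertest's actual code.

```go
package main

import (
	"fmt"
	"os"
)

// dockerEndpoint is a hypothetical, simplified model of how a Docker client
// chooses the daemon to talk to. It is not dockertest's real implementation.
func dockerEndpoint(getenv func(string) string) string {
	// An explicit DOCKER_HOST wins. In the GitLab job this is
	// tcp://docker:2375, i.e. the docker:dind service.
	if host := getenv("DOCKER_HOST"); host != "" {
		return host
	}
	// Otherwise use the local daemon's socket (the usual default on Linux).
	return "unix:///var/run/docker.sock"
}

func main() {
	fmt.Println("tests will talk to:", dockerEndpoint(os.Getenv))
}
```

On a developer machine nothing needs to be set, while in CI the same test code ends up talking to the dind service.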

tl;dr

- Configure the GitLab runner to run in privileged mode. See here for details.
- Add a Docker-in-Docker service to your build job, allowing you to use Docker in your integration tests:

services:
  - docker:dind

variables:
  # Point the docker client at the previously created service, so Docker commands are executed by that service instead of inside the container of this build job
  DOCKER_HOST: tcp://docker:2375
  # Storage driver recommended by GitLab when using Docker-in-Docker (see https://docs.gitlab.com/ee/ci/docker/using_docker_build.html#use-the-overlayfs-driver)
  DOCKER_DRIVER: overlay2
  # Disable the need for certificates when this build job communicates with the Docker service.
  # If you want to secure the communication between the container of this build job and the Docker service, you have to slightly change the configuration of the GitLab runner (see https://about.gitlab.com/blog/2019/07/31/docker-in-docker-with-docker-19-dot-03/).
  # Since in my case everything runs on the same server, I can live with this communication not being encrypted.
  DOCKER_TLS_CERTDIR: ""
  # Use this environment variable when connecting to the database inside the Go code
  DATABASE_HOST: docker
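The reason for DATABASE_HOST is that dockertest publishes the database port on whichever host runs the Docker daemon: localhost on a developer machine, but the dind service (reachable under the hostname docker) in CI. A minimal sketch of the override logic, assuming a default of localhost in the config file; databaseHost is a hypothetical stand-in for the post's config package, whose code isn't shown.

```go
package main

import (
	"fmt"
	"os"
)

// databaseHost is a hypothetical stand-in for the post's config package:
// the DATABASE_HOST environment variable overrides the file default.
func databaseHost(getenv func(string) string, fileDefault string) string {
	if v := getenv("DATABASE_HOST"); v != "" {
		return v
	}
	return fileDefault
}

func main() {
	// "localhost" is an assumed value from config.env for local runs.
	fmt.Println("connecting to database host:", databaseHost(os.Getenv, "localhost"))
}
```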

What we want to do

The goal is to have an integration test that can be executed using a dockerized GitLab runner.

You can find the complete code on GitHub.

The Go program

Looking at the program’s main function, you will see that it does nothing. For the purpose of this demo it is irrelevant what the program does.

The important part is that our program uses migrate to manage the database. migrate is a tool that allows us to write SQL scripts which are then executed in a defined order. migrate tracks which scripts have already been executed and only runs the scripts that are missing.
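migrate expects files named {version}_{title}.up.sql (plus matching .down.sql files) and records only the last applied version in the database. The sketch below is a simplified model of how "only runs the scripts that are missing" follows from that, ignoring details such as dirty state and down migrations; pendingMigrations and the example file names are hypothetical.

```go
package main

import (
	"fmt"
	"sort"
)

// pendingMigrations is a simplified, hypothetical model of migrate's "up":
// the database stores only the last applied version, and every script with
// a higher version is applied in ascending order.
func pendingMigrations(current uint, hasVersion bool, available []uint) []uint {
	sorted := append([]uint(nil), available...)
	sort.Slice(sorted, func(i, j int) bool { return sorted[i] < sorted[j] })

	var pending []uint
	for _, v := range sorted {
		// A new database has no version yet, so every script is pending.
		if !hasVersion || v > current {
			pending = append(pending, v)
		}
	}
	return pending
}

func main() {
	// Hypothetical scripts: 1_create_users.up.sql, 2_add_email.up.sql, 3_add_index.up.sql
	available := []uint{3, 1, 2}
	fmt.Println(pendingMigrations(0, false, available)) // [1 2 3]
	fmt.Println(pendingMigrations(2, true, available))  // [3]
	fmt.Println(pendingMigrations(3, true, available))  // []
}
```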

The code to be tested

This logic is contained inside the MigrateDatabase function.

When called it checks the current version of the database and runs any missing migrations to update the database to the latest version.

// Imports used by this function (assuming golang-migrate v4)
import (
	"database/sql"
	"errors"
	"log"

	"github.com/golang-migrate/migrate/v4"
	"github.com/golang-migrate/migrate/v4/database/postgres"
	// Registers the "file:" source driver used for pathToMigrations
	_ "github.com/golang-migrate/migrate/v4/source/file"
)

// MigrateDatabase updates the database to the latest version if it isn't already up-to-date.
//
// Uses github.com/golang-migrate/migrate.
func MigrateDatabase(databaseName, pathToMigrations string, db *sql.DB) error {
	log.Println("begin migration of database")

	// Set up the driver which is used by golang-migrate to perform the migration
	driver, err := postgres.WithInstance(db, &postgres.Config{})
	if err != nil {
		log.Println("error creating database driver for migration")
		return err
	}

	// Set up the instance on which the migration is executed
	instance, err := migrate.NewWithDatabaseInstance("file:"+pathToMigrations, databaseName, driver)
	if err != nil {
		log.Println("error creating pre-conditions for database migration")
		return err
	}

	// Get the version of the database before the migration. On a new database
	// migrate.ErrNilVersion ("no migration") is returned, which is expected.
	version, dirty, err := instance.Version()
	if err != nil && !errors.Is(err, migrate.ErrNilVersion) {
		log.Printf("error determining current database version: %v", err)
		return err
	}
	log.Printf("current database version: %v (dirty: %v)", version, dirty)

	// Run the migration
	if err := instance.Up(); err != nil {
		// If the database is up-to-date, Up returns migrate.ErrNoChange ("no change").
		// From this application's point of view this is not an error; it just means no migration is needed.
		if errors.Is(err, migrate.ErrNoChange) {
			log.Println("database is up-to-date")
			return nil
		}
		// Any other error indicates an issue while migrating the database
		log.Println("error migrating database")
		return err
	}

	// Get the version of the database after the migration
	version, dirty, err = instance.Version()
	if err != nil {
		log.Printf("error determining new database version: %v", err)
	}
	log.Printf("new database version: %v (dirty: %v)", version, dirty)

	return nil
}

This is the logic we want to test. I prefer unit tests, but in this case an integration test makes more sense.

Testing the program

Our test case is simple:

Given an empty database

When executing the migration

Then the database should be migrated to the latest version

Preparing for the test

Before executing the test we must ensure the pre-conditions are met. In this case it is a Docker container in which a PostgreSQL database is running.

To achieve this we can make use of a TestMain function. It allows us to set up whatever is required by the tests that will be executed.

func TestMain(m *testing.M) {
	// Connect to the Docker daemon. An empty endpoint lets dockertest read DOCKER_HOST or fall back to the local default.
	pool, err := dockertest.NewPool("")
	if err != nil {
		log.Fatalf("error connecting to docker: %v", err)
	}

	// Read the values from the config. (Environment variables override what is defined inside the "config.env" file.)
	configuration := config.InitializeConfig("..")

	// Set up and start the Docker container for the PostgreSQL database. (The environment variables are the same as when starting the container on the command line.)
	postgresContainer, err := pool.Run("postgres", "13", []string{
		"POSTGRES_PASSWORD=" + configuration.DatabasePassword,
		"POSTGRES_USER=" + configuration.DatabaseUser,
		"POSTGRES_DB=" + configuration.DatabaseName,
	})
	if err != nil {
		log.Fatalf("error starting postgres docker container: %s", err)
	}

	// The host port mapped to the container's port 5432 is assigned randomly. Here we ask the container on which port the database is available.
	port := postgresContainer.GetPort("5432/tcp")

	// Establish the connection to the database (DB is a package-level *sql.DB shared with the tests)
	DB, err = Connect(configuration.DatabaseHost, port, configuration.DatabaseName, configuration.DatabaseUser, configuration.DatabasePassword, configuration.DatabaseOpenTimeout)
	if err != nil {
		// log.Fatalf exits immediately, so remove the container first to avoid leaving it behind
		if purgeErr := pool.Purge(postgresContainer); purgeErr != nil {
			log.Printf("error purging postgres container: %v", purgeErr)
		}
		log.Fatalf("error trying to connect to database: %v", err)
	}

	// Execute the tests
	code := m.Run()

	// Ensure all created containers are deleted again
	if err := pool.Purge(postgresContainer); err != nil {
		// Even if this fails there is nothing more we can do than log it. The test execution will be finished after this anyway.
		log.Printf("error purging resources of integration tests: %v", err)
	}

	os.Exit(code)
}

If the setup is successful, the tests are executed once m.Run() is reached. At this point a PostgreSQL database is running and a connection to it is available in the DB variable.

Executing the test

Now that we have a database running inside a Docker container, we can execute the actual test.

The test does the following:

- Ensure the database does not contain any version information

- Execute the migration

- Ensure the database now contains version information matching the SQL scripts

// TestMigrateDatabase tests the SQL migration scripts by checking the migration version before and after the migration is executed.
func TestMigrateDatabase(t *testing.T) {
	// Before the migration runs we expect the version information not to be present,
	// so an error is expected here.
	_, _, err := extractMigrationVersionFromDatabase(DB)
	if err == nil {
		t.Fatal("expected no migration version in the database before the migration, but found one")
	}

	// Run the migration
	err = MigrateDatabase(
		"integration-test",
		// Usually hard coding isn't great. But in this case the test would fail if the migrations are moved, which is a good reason for this test to fail.
		// "file:" + "//migrations" results in the source URL "file://migrations", a path relative to this package's directory.
		"//migrations",
		DB,
	)
	if err != nil {
		t.Fatalf("error migrating database: %v", err)
	}

	// Based on the migration scripts the expected version is determined
	versionScript, err := extractMigrationVersionFromScripts("migrations")
	if err != nil {
		t.Fatalf("error determining the migration version: %v", err)
	}

	// After the migration we again get the version information from the database
	versionDatabase, dirty, err := extractMigrationVersionFromDatabase(DB)
	if err != nil {
		t.Fatalf("error extracting version from database after migration: %v", err)
	}

	// Check that the database is clean
	if dirty {
		t.Fatalf("the database version after migration was 'dirty'. it was expected to not be 'dirty'.")
	}

	// Check that the version in the database matches the version based on the migration scripts
	if versionScript != versionDatabase {
		t.Fatalf("expected migration version in database and migration scripts to match. version database: %v version scripts: %v", versionDatabase, versionScript)
	}
}

Locally, we can already run this test using go test ./... (add -v, as in go test ./... -v, to see the log output).

Automating the test execution

GitLab Runner setup

To automate the test we need to set up a GitLab runner. Use the following command to create the runner.

docker run -d --name gitlab-runner --restart always \
  -v /var/run/docker.sock:/var/run/docker.sock \
  -v gitlab-runner-config:/etc/gitlab-runner \
  gitlab/gitlab-runner:latest

Before the runner can be used, it has to be registered with GitLab.

# Comments cannot be placed between backslash-continued lines, so the flags are explained here:
# --description            the name of this runner
# --tag-list               a list of tags that defines which jobs will be executed by this runner
# --locked=false           new runners are locked by default; this unlocks it without having to go into the GitLab UI
# --run-untagged=true      allow the runner to run build jobs that have no tags defined
# --executor=docker        make this a Docker runner
# --docker-image=ubuntu    the default image used for all build jobs (can be overridden on a per-job basis)
# --docker-privileged=true run the build job's containers, including services such as docker:dind, in privileged mode
# The GitLab URL and registration token are not passed here, so register asks for them interactively.
docker run --rm -it -v gitlab-runner-config:/etc/gitlab-runner gitlab/gitlab-runner:latest register \
  --description=buildy-docker-privileged \
  --tag-list=docker,privileged \
  --locked=false \
  --run-untagged=true \
  --executor=docker \
  --docker-image=ubuntu \
  --docker-privileged=true

The most important part of this command is --docker-privileged=true. It is required in order to run the Docker-in-Docker service which we will define in our build job.

Setting this parameter to true has security implications: code executed inside this container can access the Docker host, so malicious code is not confined to the container! (See here for more details.)

Since I have a dedicated build server, this is not an issue for me. I would not recommend using this setting on machines which contain important or sensitive data or host other applications.

Configure the build job

With GitLab, we define the build job inside the .gitlab-ci.yml file.

As a bonus this build pipeline not only executes the test but also publishes the test result to the GitLab pipeline.

stages:
  - test

run-tests:
  stage: test
  image: golang
  needs: []
  # The tags a GitLab runner must have in order to run this job
  tags:
    - docker
    - privileged
  # Start the dependent service which allows us to use Docker inside a Docker GitLab runner
  services:
    - docker:dind
  variables:
    # Set the Docker host to be the one of the previously created service
    DOCKER_HOST: tcp://docker:2375
    # Storage driver recommended by GitLab when using Docker-in-Docker (see https://docs.gitlab.com/ee/ci/docker/using_docker_build.html#use-the-overlayfs-driver)
    DOCKER_DRIVER: overlay2
    # Disable the need for certificates when this build job communicates with the Docker service
    DOCKER_TLS_CERTDIR: ""
    # Override the value inside the "config.env" file so the test connects to the database running inside the Docker-in-Docker service
    DATABASE_HOST: docker
  script:
    # Install the tool used to create a GitLab compatible report of the executed tests
    # (current Go versions no longer install binaries with go get, so go install is used)
    - go install github.com/jstemmer/go-junit-report@latest
    # Create the folder in which the test report will be stored
    - mkdir -p build/output
    # Run the tests and save the report in the previously created folder.
    # The exit status of the pipe is the one of go-junit-report, so the tests are run a second time to make the job fail when a test fails.
    - go test ./... -v 2>&1 | go-junit-report > build/output/test-result.xml && go test ./...
  artifacts:
    # Upload the report even if the job fails; by default artifacts are only uploaded for successful jobs
    when: always
    reports:
      # Set the path under which GitLab can find the test report
      junit: build/output/*.xml

Here I’m using the tags docker and privileged to define which runner can pick up this job. Since most jobs don’t need privileges, I like to run those on runners without them.
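The matching rule behind this is that a runner only picks up a job if it has every tag the job lists, while jobs without tags go to runners registered with --run-untagged=true. The following is a simplified, hypothetical model of that rule (canPickUp is not GitLab's code). It also shows a side effect of the registration above: because the privileged runner was registered with --run-untagged=true, untagged jobs can still land on it.

```go
package main

import "fmt"

// canPickUp is a simplified, hypothetical model of GitLab's tag matching:
// a runner may run a job only if it has every tag the job lists; untagged
// jobs only go to runners registered with --run-untagged=true.
func canPickUp(runnerTags []string, runUntagged bool, jobTags []string) bool {
	if len(jobTags) == 0 {
		return runUntagged
	}
	have := make(map[string]bool, len(runnerTags))
	for _, t := range runnerTags {
		have[t] = true
	}
	for _, t := range jobTags {
		if !have[t] {
			return false
		}
	}
	return true
}

func main() {
	privileged := []string{"docker", "privileged"}
	plain := []string{"docker"}
	runTests := []string{"docker", "privileged"} // tags of the run-tests job

	fmt.Println(canPickUp(privileged, true, runTests)) // true
	fmt.Println(canPickUp(plain, true, runTests))      // false
	fmt.Println(canPickUp(privileged, true, nil))      // true
}
```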

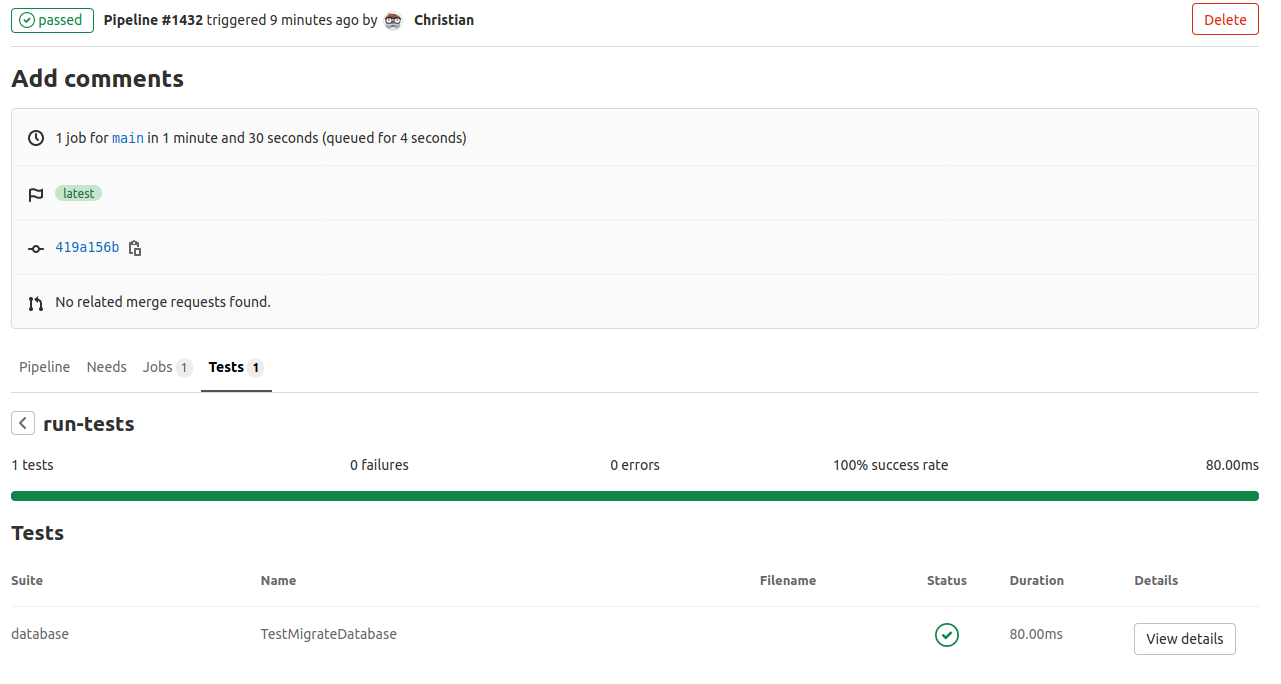

Seeing the results

With every push to the repository, the build job will now be executed, the tests run, and the results published to the pipeline.